2019-2020 Google, Facebook, Neuralink Sued for Weaponized AI Tech Transfer, Complicity in Genocide in China and Endangering Humanity with Misuse of AI

Misuse of artificial intelligence, cybernetics, robotics, biometrics, bio-engineering, 5G and quantum computing technology. Endangering the human race with the misuse of artificial intelligence technology.

This is the most significant and important lawsuit of the 21st century, and it impacts the entire world. CEOs and founders Mark Zuckerberg, Elon Musk, Sergey Brin, Larry Page and Sundar Pichai are also named as defendants along with their companies.

Endangering Humanity with the misuse of Artificial Intelligence, Complicity and Aiding in Physical Genocide inside of China by transferring AI Technology, Engaging in Cultural Genocide of Humanity, & Controlling and programming the Human Race by Social Engineering via AI coding and AI algorithmic biometric manipulation.

This is phase 1 of the first lawsuit. We are open to support at a global level. We have a network of thousands around the world and tens of thousands in China who are witnesses and have been harmed in China by the defendants' technology and data transfer. The following are summary facts extracted from the federal case complaint officially filed in San Diego, California. For details of the financial, personal and corrective behavioral demands, you may access the case in the federal court database.

Defendants: Google LLC, Facebook Inc., DeepMind Inc., Alphabet Inc., Neuralink Inc., Tesla Inc., Larry Page, Sergey Brin, Sundar Pichai, Mark Zuckerberg, Elon Musk, Cision PR Newswire & John Does 1-19

Limits in the Czech Republic

Limits valid until 1999: 4.3 V/m.

Limits valid since 2000: 41 V/m.

And since 2015: 127 V/m for the 1800 MHz GSM band!

Recommended health limit: up to 0.1 V/m!
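To compare these field-strength limits with the power-density figures used elsewhere in this section, a field value can be converted with the far-field plane-wave relation S = E²/Z0, where Z0 ≈ 376.73 ohms is the impedance of free space. The Python sketch below assumes far-field conditions (it does not hold close to an antenna), and the function name is illustrative rather than taken from any standard.

```python
# Convert an RMS electric field strength (V/m) to power density (microW/cm2).
# Assumption: far-field (plane-wave) conditions, where S = E^2 / Z0.
Z0_OHMS = 376.73  # impedance of free space, approximately 120 * pi ohms


def field_to_microwatts_per_cm2(e_v_per_m: float) -> float:
    """Plane-wave power density in microW/cm2 for a field of e_v_per_m V/m."""
    s_w_per_m2 = e_v_per_m ** 2 / Z0_OHMS  # power density in W/m2
    return s_w_per_m2 * 100.0  # 1 W/m2 = 100 microW/cm2


if __name__ == "__main__":
    limits = {
        "Czech limit until 1999": 4.3,
        "Czech limit since 2000": 41.0,
        "Czech limit since 2015 (1800 MHz GSM)": 127.0,
        "Recommended health limit": 0.1,
    }
    for label, e in limits.items():
        print(f"{label}: {e} V/m ~ {field_to_microwatts_per_cm2(e):.4g} microW/cm2")
```

Under this assumption, the 2015 limit of 127 V/m corresponds to roughly 4,300 microW/cm2 and the recommended 0.1 V/m to under 0.003 microW/cm2. Because power density scales with the square of the field, a 1,270-fold difference in V/m becomes a difference of about 1.6 million in microW/cm2.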

Government use only, 3 August 1977 – Translation on USSR Science and Technology, Biomedical Sciences – Effects of Nonionizing Electromagnetic Radiation

The report contains information on aerospace medicine, agrotechnology, bionics and bioacoustics, biochemistry, biophysics, environmental and ecological problems, food technology, microbiology, epidemiology and immunology, marine biology, military medicine, physiology, public health, toxicology, radiobiology, veterinary medicine, behavior science, human engineering, psychology, psychiatry and related fields, and scientists and scientific organizations in biomedical fields.

It was reported in numerous works of the 1950s and 1960s (Ye. V. Gembitskiy; N. A. Osipov; A. A. Orlova; N. V. Uspenskaya) that there are hemodynamic (dynamics of blood flow) changes of a vagotonic nature, usually evaluated as specific reactions of the organism to radio waves. Vagotonic vegetovascular reactions were demonstrated primarily with exposure to super high frequency (SHF) electromagnetic fields of the order of hundreds of microwatts per sq cm to a few milliwatts per sq cm. Several authors (E. A. Drogichina and M. N. Sadchikova; N. V. Tyagin; P. I. Fotanov and others) observed asthenic (neurasthenic) manifestations, as well as vegetovascular changes related to increased excitability of the sympathetic branch of the autonomic nervous system and lability of arterial pressure, with a tendency toward hypertensive or hypotensive reactions, in individuals exposed to SHF (microwave) electromagnetic radiation for long periods of time. Publications of the last few years (G. G. Lysin; V. P. Medvedev; M. N. Sadchikova and K. V. Nikonova, and others) indicate the possible development of neurocirculatory disorders of the hypertensive type under the influence of microwaves of up to hundreds of microwatts per sq cm.

The objective of the present work was to study vascular tonus in individuals whose work involved exposure to low-intensity microwaves. A total of 885 workers in the radio and electronics industries underwent polyclinical examinations; 353 people (275 men and 78 women) were in contact with microwave sources and 532 people (411 men and 121 women) made up the control group. We analysed the data from preliminary and periodic physicals on the basis of outpatient charts. A total of 68 people underwent a comprehensive workup in the hospital. The subjects ranged in age from 24 to 49 years. Their occupations were as follows: adjusters, engineers, technicians, testers and fitter-electricians.

Defense Intelligence Agency, March 1976 – Biological Effects of Electromagnetic Radiation (Radiowaves and Microwaves) Eurasian Communist Countries (U)

This is a Department of Defense intelligence document prepared by the US Army Medical Intelligence and Information Agency and approved by the Directorate for Scientific and Technical Intelligence of the Defense Intelligence Agency.

The purpose of this review is to provide information necessary to assess human vulnerability, protection materials, and methods applicable to military operations. The study provides an insight on the current research capabilities of these countries. Information on trends is presented when feasible and supportable.

The study discusses the biological effects of electromagnetic radiation in the radio and microwave ranges (up to 300,000 megahertz).

More than 2000 Documents prior to 1972 on Bioeffects of Radio Frequency Radiation

More than 2000 references on the biological responses to radio frequency and microwave radiation, published up to June 1971, are included in the bibliography. Particular attention has been paid to the effects on man of non-ionizing radiation at these frequencies. The citations are arranged alphabetically by author, and contain as much information as possible so as to assure effective retrieval of the original documents. An outline of the effects which have been attributed to radio frequency and microwave radiation is also part of the report.

Glaser, Z.R. 1972. Bibliography of reported biological phenomena (‘effects’) and clinical manifestations attributed to microwave and radio-frequency radiation.

The value of the Glaser 1972 document is to counter the statements that “credible” research does not exist showing non-thermal effects. This is a false statement promoted by those who are either unaware of the literature or unwilling to admit this radiation, at levels to which we are currently exposed, can be harmful.

Credible research does exist; it has been around for decades; and it has been largely ignored by those responsible for public and occupational health.

Key Words: Biological Effects, Non-Ionizing Radiation, Radar Hazards, Radio Frequency Radiation, Microwave Radiation, Health Hazards, Bibliography, Electromagnetic Radiation Injury

This is one of the first large-scale reviews of the literature on the biological effects of microwave and radio frequency radiation, and it first appeared in 1971. The author classified the biological effects into 17 categories (see below). These categories include heating (thermal effects); changes in physiologic function; alterations of the central, autonomic and peripheral nervous systems; psychological disorders; behavioral changes (animal studies); blood and vascular disorders; enzyme and other biochemical changes; metabolic, gastrointestinal, and hormonal disorders; histological changes; genetic and chromosomal effects; the pearl-chain effect (related to orientation in bacteria and animals); and a miscellaneous group of symptoms that didn't fit into the above categories.

While it is clear that radiation that causes heating can also cause secondary effects, not all the effects listed above are heat-related. Indeed, much of the literature at the lower exposure levels is unrelated to heating. This is the type of research that helped regulators to formulate their microwave guidelines. The non-thermal studies have been ignored by the World Health Organization, to which many countries look for guidance, and hence the guidelines differ by orders of magnitude, from the lowest in Salzburg, Austria (0.1 microW/cm2) to the highest (5,000 microW/cm2 for occupational exposure) established by ICNIRP (International Commission on Non-Ionizing Radiation Protection). This is a 50,000-fold difference!

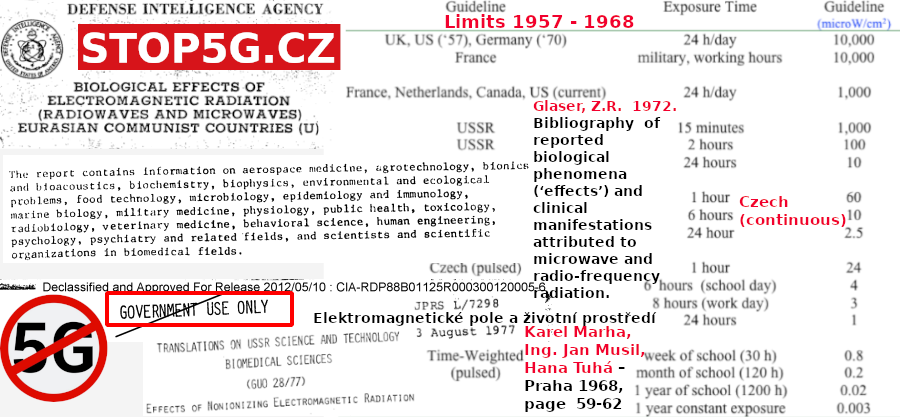

Review of International Microwave Exposure Guidelines from 1957 to 1968

Swanson and colleagues from the International Labour Office (Geneva, Switzerland) and the Bureau of Occupational Safety and Health, Public Health Services (Cincinnati, Ohio) reviewed guidelines for microwave radiation and published their review in the American Industrial Hygiene Association Journal, Vol. 31: 623-629 (1970).

Below is some information from this article. My comments appear in square brackets. To convert from mW/cm2 to microW/cm2, multiply by 1000.

United States

1. From the 1940s to the 1970s the use of microwave-emitting equipment increased considerably.

2. In the United States radio frequencies (RF) from 10 to 10,000 MHz were classified as microwave radiation, while in Europe the range was from 300 to 300,000 MHz. [NOTE: We now use the European range to delineate the microwave part of the radio frequency spectrum.]

3. By 1970, scientists recognized that parts of the body that are unable to dissipate heat are the most vulnerable to microwave radiation. This includes the lens of the eye (cataracts) and the reproductive organs (sterility or degenerative changes).

4. Depth of penetration of radiation into tissue is a function of frequency with greater penetration at lower frequencies.

5. In the United States the first guidelines were established during the Tri-Service conference, held in 1957. Below is a quote about the guidelines:

It was the opinion of those participating in the Conference that there were not sufficient data to determine safe exposure levels for each frequency, or ranges of frequencies, within the microwave region; therefore, a level of 10 mW/cm2 [10,000 microW/cm2] was selected for all frequencies. The U.S. Air Force, in adopting this exposure level in May 1958, applied it to the frequency range of 300 to 30,000 MHz and established it as a maximum permissible exposure level, which could not be exceeded. The only factor considered in this criterion is the power density level. Such factors as time of exposure, ambient environmental temperatures that could have an increased or decreased effect on the body’s thermal response, the frequency of the microwave energy, effects of multifrequency exposures, differing sensitivity of various body organs, and effect of air currents on cooling the body are not considered, although they are all recognized as factors that might affect biological response.

[NOTE: It was clear in 1970 that the US guidelines were somewhat arbitrary, were based on thermal effects only, and did not include other factors that influence biological and health consequences. This guideline has since been lowered from 10 to 1 mW/cm2 but is still 100 to 1000 times higher than guidelines in other countries.]

UK, West Germany, France and Netherlands

6. Guidelines in the UK and in West Germany allowed citizens to be exposed to 10 mW/cm2 (the same as in the U.S.).

7. In France only military personnel during working hours were allowed to be exposed to 10 mW/cm2. In rest areas and in public areas the guidelines were 1 mW/cm2.

8. In the Netherlands the guidelines were at 1 mW/cm2.

Poland, USSR, Czechoslovakia

9. Guidelines in the Eastern Bloc countries were much more protective than those in western countries.

Poland

10. Polish guidelines, established in 1961 and 1963, were as follows:

- Up to 10 microW/cm2 [0.01 mW/cm2] – no limitation on time of work or sojourn in this field.

- Between 10 and 100 microW/cm2 [0.01 and 0.1 mW/cm2] – cumulative time of work or sojourn not to exceed 2 hours in every 24 hours.

- Between 100 and 1000 microW/cm2 [0.1 and 1 mW/cm2] – cumulative time of work or sojourn not to exceed 20 minutes in 24 hours.

11. The Polish regulation requires an annual medical examination for exposed workers including neurological and ophthalmological examinations; safe placement of microwave generating installations; protective screening; personnel protection; site surveillance; and safety education.

12. The Polish regulation forbids work with microwave radiation for young people (age not provided), pregnant women, and other people suffering from certain diseases, which are listed in the regulation.

USSR

13. The USSR standards were based on time of exposure as follows:

- 10 microW/cm2 [0.01 mW/cm2] for a working day

- 100 microW/cm2 [0.1 mW/cm2] for 2 hours daily

- 1000 microW/cm2 [1 mW/cm2] for 15 minutes daily [so at 1000 microW/cm2 the Soviets could be exposed for only 15 minutes and the Poles for only 20 minutes, but the Americans could be exposed for 24 hours each day!]

14. The U.S.S.R. is also one of the first to propose exposure standards for intermediate-frequency electromagnetic radiation [dirty electricity], which heretofore had been considered as having no effect on the human body. These levels are:

- Medium wave (100 kHz – 3 MHz) – 20 volts/meter [about 106 microW/cm2 as a plane-wave equivalent]

- Short wave (3 MHz – 30 MHz) – 5 volts/meter [about 6.6 microW/cm2]

- Ultra short wave (30 MHz – 300 MHz) – 5 volts/meter [about 6.6 microW/cm2]

[NOTE: The WHO has recently recognized the importance of intermediate frequencies (IF) and has noted that the available information on them is severely limited.]

15. Medical examinations are regulated in the Soviet Union for persons exposed to electromagnetic radiation. Medical contraindications are enforced so that workers are not allowed to be exposed to microwave radiation if specified diseases exist. Heavy emphasis is placed on blood disorders, neurological disturbances, and chronic eye diseases.

16. Preventive measures of an engineering nature are used by Soviet health and epidemiological centers to ensure compliance with their health regulations. Decreasing the amount of radiated energy, reflective and absorptive screening, and personnel protection measures are widely used for personnel operating microwave equipment.

Czechoslovakia, 1965, above 300 MHz:

17. The following values are considered, for the general population and other workers not employed in the generation of electromagnetic energy, as tolerable doses of radiation not to be exceeded at the person's location during one calendar day:

- for continuous generation in the microwave frequencies – value = 60, where the energy is expressed in microwatts per square centimeter and the time in hours [(microW/cm2) × t (hours) < 60; therefore twenty-four hours of exposure corresponds to an average energy flow of 2.5 microW/cm2].

- for pulsed generation in the microwave frequencies – value = 24, where the energy is expressed in microwatts per square centimeter and the time in hours [(microW/cm2) × t (hours) < 24; therefore twenty-four hours of exposure corresponds to an average pulsed energy flow of 1 microW/cm2].

18. The final point that is worth noting is the authors' recommendation that "in applying the concept of a time-weighted exposure the health specialist must consider how far the dose-time relationship can be extrapolated."
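The Polish, Soviet and U.S. limits summarized in points 5, 10 and 13 tie permitted exposure time to power density in different ways. The Python sketch below encodes them as lookup functions so they can be compared at any given level. It is a hypothetical encoding: the function names are illustrative, and treating a "working day" as 8 hours, a value on a band edge as belonging to the lower band, and levels above the highest listed band as not permitted are assumptions not stated in the source.

```python
# Hypothetical encoding of the time-based limits in points 5, 10 and 13.
# Input: average power density in microW/cm2. Output: permitted minutes per day.
# Assumptions (not in the source): a "working day" is 8 hours, a value on a
# band edge falls in the lower band, and levels above the top band get 0 minutes.

MINUTES_PER_DAY = 24 * 60


def poland_minutes(density: float) -> int:
    """Polish rules of 1961/1963."""
    if density <= 10:
        return MINUTES_PER_DAY  # no limitation on time
    if density <= 100:
        return 120  # cumulative 2 hours in every 24 hours
    if density <= 1000:
        return 20  # cumulative 20 minutes in 24 hours
    return 0


def ussr_minutes(density: float) -> int:
    """Soviet time-of-exposure standards."""
    if density <= 10:
        return 8 * 60  # a working day (assumed to be 8 hours)
    if density <= 100:
        return 120  # 2 hours daily
    if density <= 1000:
        return 15  # 15 minutes daily
    return 0


def us_minutes(density: float) -> int:
    """1958 U.S. Air Force level: 10 mW/cm2 (10,000 microW/cm2), no time factor."""
    return MINUTES_PER_DAY if density <= 10_000 else 0


if __name__ == "__main__":
    level = 1000  # microW/cm2
    for country, rule in (("Poland", poland_minutes), ("USSR", ussr_minutes),
                          ("United States", us_minutes)):
        print(f"{country}: {rule(level)} minutes per day at {level} microW/cm2")
```

At 1000 microW/cm2 this reproduces the comparison in point 13: 20 minutes per day in Poland, 15 in the USSR, and the full 24 hours (1440 minutes) under the U.S. Air Force level, which considered power density only.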

Extrapolation of the dose-time relationship.

Both cell phones and WiFi routers use pulsed microwave radiation and it is well known that pulsed microwave radiation is more harmful than continuous wave radiation. If we apply the Czech time-weighted concept for pulsed radiation we get the following (see last four rows in table 1). These values begin to approach the Salzburg recommended guidelines for outdoor (0.1 microW/cm2) and indoor (0.01 microW/cm2) exposure.
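The Czechoslovak rule in point 17 is a daily dose limit: average power density multiplied by exposure hours must stay below 60 (continuous) or 24 (pulsed) microW/cm2 × hours. A minimal Python sketch, with illustrative function names that are not from the source, computes the average density at which a given daily exposure time reaches that limit.

```python
# Sketch of the 1965 Czechoslovak time-weighted rule (point 17):
# average power density (microW/cm2) x exposure time (hours) per calendar day
# must stay below 60 for continuous and 24 for pulsed generation.
DAILY_DOSE_LIMIT = {"continuous": 60.0, "pulsed": 24.0}  # microW/cm2 x hours


def threshold_density(hours: float, mode: str = "continuous") -> float:
    """Average density (microW/cm2) at which `hours` of exposure reaches the daily limit."""
    if not 0 < hours <= 24:
        raise ValueError("exposure time must be more than 0 and at most 24 hours")
    return DAILY_DOSE_LIMIT[mode] / hours


def within_limit(density: float, hours: float, mode: str = "continuous") -> bool:
    """True if the daily dose (density * hours) stays strictly below the limit."""
    return density * hours < DAILY_DOSE_LIMIT[mode]


if __name__ == "__main__":
    for mode in ("continuous", "pulsed"):
        for hours in (1, 8, 24):
            print(f"{mode}, {hours} h: below {threshold_density(hours, mode):.3g} microW/cm2")
```

For 24 hours this gives 2.5 microW/cm2 (continuous) and 1 microW/cm2 (pulsed), matching the bracketed figures in point 17. Because the threshold scales as 1/t, stretching it beyond a single day is exactly the dose-time extrapolation the authors caution about in point 18.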

Early Research on the Biological Effects of Microwave Radiation: 1940-1960

The history of research on the biological effects of microwave radiation effectively begins with the development of radar early in World War II, and the concerns that arose thereafter within industrial and military circles over the possible deleterious effects this new source of environmental energy could have on personnel. Prior to this time, the energy levels at which microwaves had been produced were not sufficient to cause widespread concern about harmful effects. Before the invention of radar, artificially produced microwave energy was not a general environmental problem.

However, as this field of research began to take shape, it did not do so in a vacuum. Well before the invention of radar, medical researchers had been interested in the controlled effect of radio-frequency energy on living things. Once it was discovered that radio waves could be used to heat body tissue, research was undertaken to study how such heating took place and its effect on the whole organism.

Between the early 1940s and 1960, research on the biological effects of microwave radiation slowly shifted from its medical context and the search for benefits to a military-industrial context and the search for hazards. The consequence of this shift was twofold. First, it significantly altered the funding patterns and institutional setting in which the majority of research was conducted. Second, it resulted ultimately in the near abandonment of the field when, at the end of the first major research effort on biological effects, the Tri-Service program of the years 1957-1960, those setting policy had solved to their own satisfaction the major problems that concerned them and withdrew their support, leaving the field stranded until new sources for funding were found.

Background 1885-1940: early work on short-waves and therapy

Interest among researchers in the effects of electricity on biological systems arose almost as soon as electricity could be generated in a controlled form. This same interest rapidly shifted to research on the biological effects of electromagnetic radiation when, during the years 1885-1889, Heinrich Rudolf Hertz demonstrated a technique for propagating electromagnetic energy through space. Typical of this shift is the Parisian scientist Arsène d'Arsonval. Prior to the late 1880s, d'Arsonval had devoted considerable time to investigating the physiological effects of electrocution. Shortly after learning about the new Hertzian apparatus, d'Arsonval developed his own equipment, which produced 10^4 to 10^5 cm waves at currents nearing 20 amperes, and turned his attention to its possible physiological and medical uses. By 1893, he was publishing papers on the influence of radio waves on cells.

The ensuing technique, called 'diathermy' (or diathermotherapy) by von Zeynek in 1908, spread rapidly and was greatly improved by A. W. Hull's invention of the magnetron tube in 1920. Hull's magnetron, which was developed at the General Electric Company laboratories in Schenectady, New York, was capable of producing ultra-short waves (waves under 100 m were considered short, those in the 3-15 m range ultra-short) at higher energy levels. It proved to be a convenient device for inducing local heating in tissue and was adopted as a recognized piece of medical apparatus.

Research on the new ultra-short waves soon sparked a controversy that has enlivened the microwave field ever since. It was well known by the early 1920s that RF waves induced local heating, but the question soon arose whether heating was the only effect to be expected. A summary of the results of experiments conducted in France suggested that it was not. In an important article appearing in 1924, A. Gosset, A. Gutmann, G. Lakhovsky and I. Magrou showed that when tumorous plants were subjected to ultra-short wavelengths, the tumors initially grew rapidly and then died. They believed that the effects were not due to heating alone, thereby sparking new investigations in the United States and elsewhere on what came to be known as 'nonthermal' or 'specific' effects.

The new investigations on nonthermal effects soon began to yield results. Work undertaken in 1924 and reported in 1926 by a surgeon with the United States Public Health Service, J. W. Schereschewsky (who also wrote Problems of Infant Mortality), detected severe symptoms and lethal effects in mice exposed to ultra-short wave radiation that did not appear to be due to the production of heat. In 1928, having taken on a second post in Preventive Medicine and Hygiene at Harvard Medical School, Schereschewsky followed up his earlier studies with published reports that he could destroy malignant tumors in mice, again without much apparent heating. Such reports prompted a small but significant body of literature attempting to determine the nature of the supposed nonthermal effects.

At about the same time, Erwin Schliephake, working in Germany, found that condenser fields killed small animals such as flies, rats and mice. These findings were reported in 1928. One year later, while suffering from a painful nasal furuncle, Schliephake turned the field on himself, with the result being the rapid improvement of his condition. As a consequence, he and his colleague in physics, Abraham Esau, began to advocate using short-wave current in the treatment of local pathology. Their advocacy was taken up by another school of medical researchers who saw possibilities for diathermy in fever therapy.

Fever therapy had as its objective raising a patient's temperature to help the natural forces of the body reject the disease organism. In January 1928, Helen Hosmer of the Department of Physiology of Albany Medical College had been called in by W. R. Whitney, director of General Electric's research laboratory in Schenectady, New York, to investigate the headaches and other unpleasant symptoms experienced by technical personnel working with new high-power short-wave tubes being developed for short-wave radio. Hosmer discovered that persons in the immediate vicinity of an operating tube could suffer increases in body temperature of 2-3 °F.

With the introduction of a medically accepted use for diathermy in 1928, the debate over thermal versus nonthermal effects began in earnest. It was not only contradictory scientific information that sparked debate over thermal versus nonthermal effects.

To summarize, it is clear from the literature that the scientific research that forms the immediate background to the investigation of the biological effects of microwave radiation was conducted primarily at medical schools by medical researchers who were interested in therapeutic application. The majority of these researchers assumed that the only demonstrated effects of that portion of the electromagnetic spectrum under investigation were thermal in nature. By the late 1930s, no established researchers maintained unqualified support for nonthermal effects. Moreover, as researchers looked ahead to the future, prospects for further developments appeared bright. The economic return on short-wave machines as well as short-wave radios caused the technical community to continue work looking for shorter wavelengths and higher powers. In 1937 the Triode was developed by Lee de Forest and the magnetron tube improved at Bell Telephone Laboratories. A breakthrough was engineered by researchers at Stanford when in March of 1939 they developed the Klystron tube. This allowed them to generate wavelengths as short as 10-40 cm at outputs of several hundred watts. The age of microwave radiation had arrived.

The war years and after, 1940-1953: military interest in harmful effects

The impact of the war years on research on the biological effects of electromagnetic radiation was rapid and undoubtedly important. Because radar was crucial to the war effort (it increased the capabilities of air warfare and was indispensable in keeping the sea lanes open), research on radar technology received the same high priority as research on the atomic bomb. At the same time, reports of biological effects experienced by personnel exposed to radar (warming, baldness and temporary sterility being the most frequently mentioned) prompted the military to investigate the problem of possible hazards. The medical school context was replaced by the military laboratory.

The investigations undertaken by the military early in the war reflected the mixed background of the new microwave technology. Its higher energy levels and shorter wavelengths placed it in a category midway between the benefits of diathermy and the known hazards of ionizing radiation. But where between the two did it fall? The report issued by J. B. Trevor, a radio engineer, indicates that the technical advisors clearly viewed radar as little more than high-powered diathermy units.

That these early technical reports did not fully settle the problem of potential radar hazards is indicated by the fact that they were soon followed by personnel surveys and laboratory tests that sought further clarification of the situation. Headaches and a 'flushed feeling' were reported, but were felt to be quite unimportant. Otherwise, Daily reported, the physical and blood examinations were normal (although the testing was poor), and no sterility or balding could be attributed to exposure to radar. The Air Force, then still part of the Army, also took an interest in confirming that radar was not harmful to personnel. Again, the major impetus behind these studies was the morale problem. As the first Army Air Force report remarked, 'although field investigations cast doubt that radar waves were a dangerous form of electromagnetic radiation, rumors persisted that sterilization or alopecia [baldness] might result from such exposure.'

The next study, commissioned by the Army Air Force and published in the Air Surgeon's Bulletin in December 1944, concentrated on hematologic examinations of 124 personnel who had been exposed to radar. The results indicated that erythrocyte counts were lower in exposed personnel than the commonly accepted level, but they were felt to be 'within the same range as that of the controls'. The reticulocyte counts were 'significantly higher in the exposed subjects than in the controls', but these too were thought to be 'within the normally accepted range'. The authors therefore concluded, perhaps not for fully justifiable reasons, that 'no evidence was discovered which might indicate stimulation or depression of the erythropoietic and leukopoietic systems of personnel exposed to emanations from standard radar sets over prolonged periods.'

The few confirmed effects – headaches and flushing – were certainly not very serious in light of the importance of radar to the war effort.

J. F. Herrick had worked with the U.S. Signal Corps during the war on their technical studies of radar and was well acquainted with the work done at the Radiation Laboratory at MIT. Herrick's excitement over microwave diathermy was partly the result of a discovery made during the war but kept secret: different parts of the atmosphere absorbed microwaves at different rates. This discovery suggested to Herrick that different tissues of the body might absorb microwave radiation at different rates.

The discovery of medical hazards

Just as the effort was getting underway in 1948 to study the possible uses of the new microwave diathermy, two groups of researchers, at the Mayo Clinic and the University of Iowa, reported results that would have a drastic impact on the future of the field. Both groups discovered, apparently independently of each other's work, hazardous effects that stemmed from microwave exposure.

Louis Daily, of the University of Minnesota and an affiliate of the Mayo Clinic, briefly described cataracts that appeared in dogs exposed to direct microwave radiation, at the same time that researchers at the University of Iowa, working under H. M. Hines, were publishing two longer articles describing lenticular opacities in rabbits and dogs and testicular degeneration in rats. Reaction to this work was slow in coming. Serious funding for hazards research would not become available on any major scale for another five years. But in the long run, the discoveries of 1948 set in motion once again a shift in research patterns from medical to military camps and from a focus on beneficial effects to a search for hazards.

Cataracts had long been known to result from heat, as evidenced by their unusually frequent occurrence in glassblowers and chainmakers. They had also been produced experimentally by infrared radiation. In the course of his work, Daily found that direct radiation of the eye at high intensities could produce cataracts.

Impetus for the Iowa studies came from a very different setting. The Iowa group was funded by Collins Radio Company of Cedar Rapids, Iowa, which in turn was a subcontractor for the Air Force's Rand Corporation. The project that Collins Radio had undertaken for the Air Force was to explore the possibility of using microwaves to transmit power over long distances. (In 1949, an engineer from Collins Radio demonstrated the feasibility of such transmission to the public by setting up a device at the Iowa State Fair that could pop popcorn in a bag.) The effect such a device could have on humans was of sufficient concern to researchers at Collins Radio to prompt them to seek the advice of the Department of Physiology at the nearby university.

Using the newly developed Raytheon Microtherm, two groups of researchers at Iowa, working under the direction of H. M. Hines, began the search for hazards sometime in 1947. Since it was common knowledge that microwaves generated heat in tissues, they proceeded on the assumption that physiological damage would most likely occur in avascular areas, where the blood could not as efficiently carry off excess heat. This made the eye an obvious choice for investigation. As one of the papers put it, 'it was thought that since the center of the eye is a relatively avascular area it might be less capable of heat dissipation than other more vascular areas.' Moreover, since heat was also known to cause testicular degeneration and since the testicles are also relatively avascular, the effects of microwave radiation on that area of the body were examined as well. In the final in-house paper presented to Collins Radio, the researchers reported that in both areas 'definite evidence has been found that injury may occur at relatively low field intensity.'

The newfound evidence of injury gave the few who were aware of the findings cause for concern. Microwave radiation exposure was as yet unregulated. No standards existed to control the exposure of personnel to the possible dangers of microwave radiation. Lacking controls, researchers at Collins Radio warned in a report published in Electronics in 1949 that 'microwave radiation should be treated with the same respect as are other energetic radiations such as X-rays, alpha rays, and neutrons'. Again, Collins Radio spokesman John W. Clark pointed the way: 'The work which has been described is of a very preliminary nature. We have definitely established that it is possible to produce serious tissue damage with moderate amounts of microwave energy but have no idea of the threshold energy, if one exists, for these phenomena. Further work along these lines is urgently needed.'

Daily's work at the Mayo Clinic continued, as did a portion of the Iowa research, which was picked up by the Office of Naval Research when the Rand Corporation ended its subcontract with Collins Radio in 1948.

Renewed concern, 1953-1957: worries of industry

At this time, John T. McLaughlin, a physician and medical consultant at Hughes Aircraft Corporation in Culver City, California, had a case of purpura hemorrhagica (internal bleeding) brought to his attention; it involved a young male employee who had required hospitalization. The hospital called McLaughlin to ask what the young man had been irradiated with, since his blood picture resembled that often seen in mild radiation cases. McLaughlin came to suspect that microwave radiation might have been the cause, and by early 1953 had turned up an estimated 75 to 100 cases of purpura at Hughes out of a total work force of about 6,000. Some cases showed extensive internal bleeding and needed hospitalization and transfusion. Investigating further, he found questionable cases of cataracts among Hughes employees, in addition to a 'universal complaint of headaches by personnel working in the vicinity of microwave radiation'.

John E. Boysen's investigations into the effects of whole-body irradiation of rabbits also eventually convinced the Command that microwave radiation ought to be completely reviewed. Officers at the Aero-Medical Laboratory, Wright Field, Ohio, of the Army Air Force, were talking about beginning a research project in the field and had assigned Captain R. W. Ballard to look into it. In sum, McLaughlin found that he was not alone in his belief that more research on hazards was needed.

In addition, McLaughlin gathered other information by word of mouth that produced an alarming picture. One of his contacts reported two cases of leukemia among 600 radar operators and radiation illness from the soft X-ray radiation of unshielded cathode tubes. He was also informed that in one group of five men doing research on microwave radiation, two had died of brain tumors within a year and one had suffered a severe heart condition.

The Tri-Service era: 1957-1960

Direct military involvement in research on the biological effects of microwave radiation at first took shape slowly after the 1953 Navy Conference and the ARDC directive of the same year. Two avenues were selected to initiate research, one through existing military research facilities, the other through subcontracts with university scientists. In both cases, however, the major emphasis, like that in medical and industrial circles during the same period, was aimed predominantly at the problem of hazards and the one issue regarding hazards that above all concerned the military: the exposure level at which injuries could be expected to appear. (By the mid-1950s, no one who fully understood the research that had been conducted doubted that injuries would occur if the dose levels were high enough.) This was the issue that brought the military into the business of funding basic research in this area, and it is the issue whose resolution (or at least assumed resolution) brought that funding near to an end by 1960.